This is especially so in the field of bio-imaging, where the raw data is often voluminous yet highly variable and difficult to study. However, one persistent challenge in deep-learning-assisted scientific discovery is that the workings of artificial neural networks are often difficult to interpret.

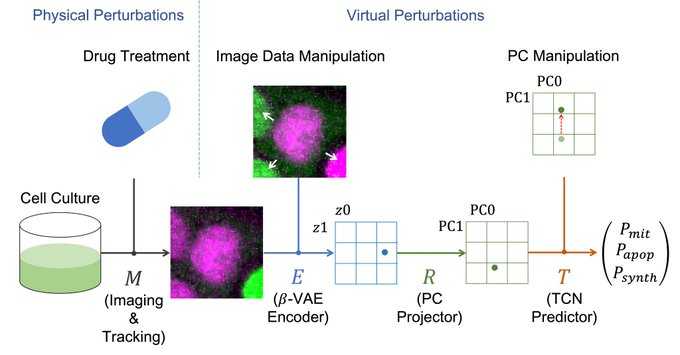

Here we present a simple technique for investigating the behaviour of trained neural networks: virtual perturbation. By making precise and systematic alterations to the input data, or to the network's internal representations of that data, we are able to discover causal relationships in the outputs of a deep learning model and, by extension, in the underlying phenomenon itself. As an exemplar, we use our recently described deep-learning-based cell fate prediction model, a network trained to predict the fate of less fit cells in an experimental model of mechanical cell competition.
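The idea of virtual perturbation can be illustrated with a minimal sketch. The example below is not the cell fate prediction model itself: `toy_model`, its weights, and the helper `virtual_perturbation` are hypothetical names introduced purely for illustration, and the "trained network" is assumed to be a simple logistic function of three input features. The sketch shifts one input feature at a time by a range of offsets and records the mean change in the model output relative to the unperturbed baseline.

```python
import numpy as np

def toy_model(x):
    """Hypothetical stand-in for a trained network (illustration only).

    Maps an (n_samples, 3) feature array to a probability per sample.
    The weights are assumed values, not taken from any real model.
    """
    weights = np.array([1.5, -2.0, 0.0])
    return 1.0 / (1.0 + np.exp(-(x @ weights)))

def virtual_perturbation(model, inputs, feature_index, deltas):
    """Shift one input feature by each delta and return the mean change
    in model output relative to the unperturbed baseline."""
    baseline = model(inputs)
    effects = []
    for delta in deltas:
        perturbed = inputs.copy()              # leave the original data untouched
        perturbed[:, feature_index] += delta   # precise, systematic alteration
        effects.append(float(np.mean(model(perturbed) - baseline)))
    return np.array(effects)

# Synthetic inputs standing in for real observations (assumption).
rng = np.random.default_rng(0)
inputs = rng.normal(size=(200, 3))
deltas = np.linspace(-1.0, 1.0, 5)

for feature_index in range(inputs.shape[1]):
    effects = virtual_perturbation(toy_model, inputs, feature_index, deltas)
    print(feature_index, np.round(effects, 3))
```

In this toy setting, a feature whose perturbation shifts the output monotonically is one the model depends on, while a feature whose perturbation leaves the output unchanged is one the model ignores. The same procedure could in principle be applied to an internal representation rather than to the input, by perturbing the activations of an intermediate layer.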

By applying virtual perturbation to the trained network, we discover causal relationships between a cell's environment and its eventual fate. We compare these with known properties of the biological system under investigation to demonstrate that the model faithfully captures insights previously established by experimental research.